Computer Vision

Computer vision is a set of techniques that deal with how computational processes can obtain high-level information from digital images or videos, trying to understand and automate tasks that the human visual system is capable of performing.

These techniques include acquiring, processing, analyzing, and “understanding” images in order to produce numerical information that can be processed by a computer. In this context, the process of understanding must be understood as the transformation of images into symbolic information through the use of techniques based on geometry, physics, statistics, or learning techniques.

The field of applications of computer vision is extensive and includes, for example:

- Images classification: This is the process of distinguishing whether an image belongs to a class or not. For example, distinguish between images that contain road signs from images that do not contain them.

- Semantic segmentation of images: It consists of dividing the image into different areas that have different semantic meanings, for example, distinguishing between people and cars in a given image.

- Computational photography: These are the techniques that extend the capabilities of digital photography, resulting in an ordinary photograph, but that could not have been taken with a traditional camera. For example, the techniques of taking images with high dynamic range by smartphones.

- Facial recognition: It is the identification of people in digital images, for example, to unlock smartphones.

- Optical flow: It is the detection of the movement pattern of different objects between consecutive images having found the relative movement between the observer and the scene.

- Calibration of cameras and images: It is the search for the relationship between the position of the pixel on the camera sensor and the position of the point in the scene and in the plane of the image, for example, to calculate distances between the sensor and the objects in the scene.

Normally, a computer vision system can include several of these techniques and use them together to extract as much information as possible from the images captured.

An example of a computer vision system that includes several of these techniques are systems developed for autonomous vehicle driving, where the system must be able to:

- Identify the road signs in the scene and interpret them (segmentation and classification of images).

- Identify the rest of the elements of the scene: road, road markings, vehicles, etc. (segmentation of images).

- Calculate distances to the different objects in the scene (image calibration techniques).

- Calculate the relative motion of moving elements in the scene (optical flow).

It is the result of all these techniques that allows an autonomous driving system to drive the vehicle safely.

Point Clouds

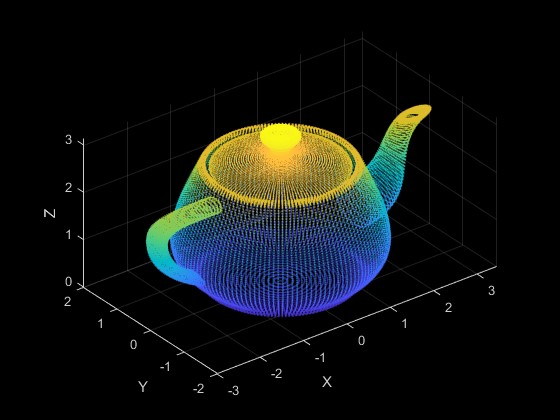

Although in general, we have talked about images as the input data of computer vision techniques, there are models within this area that work with point clouds.

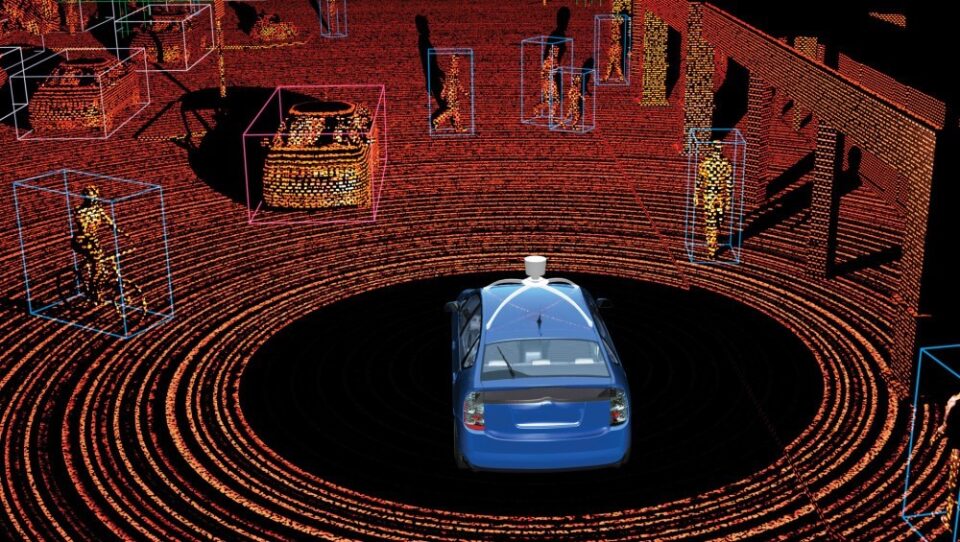

Point clouds are a set of points identified by their X, Y, Z coordinates, which represent the outer surfaces of objects. Typically these clouds are obtained by three-dimensional scanners, such as LiDAR.

Returning to the example of autonomous vehicles, many include LiDAR-type radars that allow point clouds to be obtained from the environment and use this information to improve navigation.

Semantic Segmentation and Classification of Point Clouds

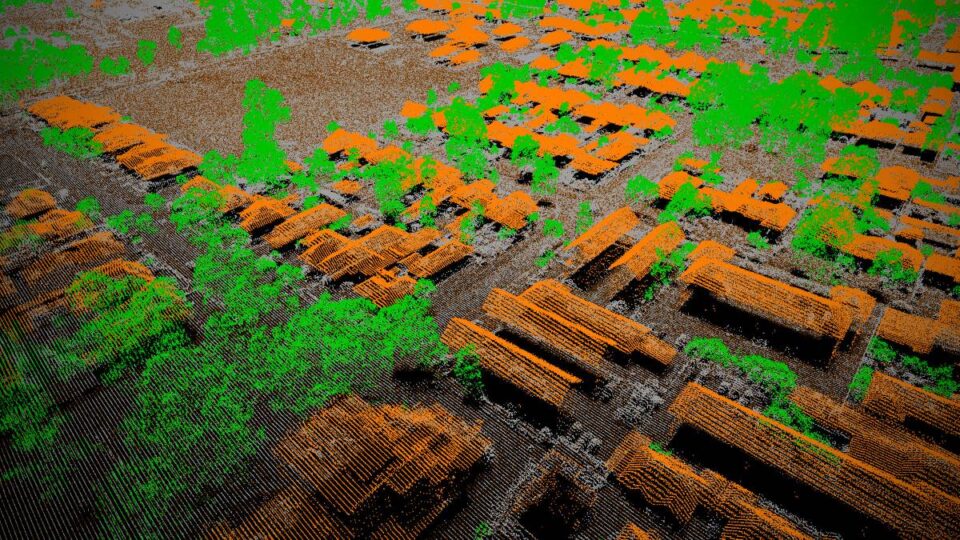

The exploitation of these point clouds to segment information and classify the different objects detected in it is one of the techniques that has recently received more attention in the world of computer vision.

This segmentation consists of finding and classifying homogeneous groups of points, in such a way that the points belonging to the same group share the same properties.

The treatment of these point clouds to carry out the process of semantic segmentation and classification is complicated since it requires having previously classified point clouds so that computer vision systems can learn to segment the different objects and classify them correctly.

This manual classification process is one of the great challenges of the vast majority of computer vision applications because they need a broad and labeled data set to learn the relationship between the information extracted from the images and the labels that are to be obtained.

In the case of point clouds, we face additional challenges since these data sets usually present a high number of points, sometimes tens of millions, that present a high level of redundancy, representing a high number of points on the same object.

There is also usually an imbalance in the sampling density of the points since the LiDAR is not able to scan with the same frequency all the areas of the environment according to the angle, the relative position of the object, or the distance to it.

Advances in Segmentation and Classification

This problem is applicable in the driving of autonomous vehicles, but also has other applications such as:

- Forest surveys, to identify the growth of vegetation and its progress.

- Infrastructure maintenance, to identify the state of hard-to-reach infrastructures.

- Generation of maps, both of buildings, and of other elements to carry out research.

- Generation of classified three-dimensional scenes, to obtain virtual maps of environments.

In all these applications, the techniques linked to deep learning are those that allow obtaining better results and new techniques are being proposed that show good results in public data sets and benchmarks, such as the following works.

Kpconv: Flexible and deformable convolution for point clouds (Thomas, H. et al, 2019), which at the time of its publication, presented the best results obtained so far in four public datasets: ModelNet40, ShapeNetPart, Semantic3D, and S3DIS. They applied segmentation and classification of point clouds from both outdoor and indoor scenes.

Subsequently, the work of Randla-net: Efficient semantic segmentation of large-scale point clouds (Hu, Q. et al., 2020) managed to improve the results of the previous one in the vast majority of data sets and very good results in the SemanticKITTI dataset where the detection of objects by an autonomous vehicle is simulated, as can be seen in the following video:

Finally, it is also worth mentioning the work of Point Transformer (Zhao, H., et al., 2021) that improves the results of both previous works through the use of Transformers, a supervised learning technique that is offering excellent results in many application areas such as natural language processing or computer vision and that in this case demonstrates the suitability of its application in the processing of three-dimensional point clouds.

As can be seen, the field is in full swing. Every year new approaches are proposed that allow obtaining and improving the results obtained so far and that, if continued at this rate, will allow the availability of new techniques that allow the use of segmentation and classification of point clouds in applications of daily use, and will make autonomous vehicles a reality.